Medicine

How can we advance medical care?

The success of medical treatments depends on the individual expertise of clinicians. By bringing computer and robotic assistance, grounded in expert knowledge and human-machine interaction, into medical practice, CeTI tackles the key challenges and limitations of next-generation surgery and builds on ongoing work in this area. The goal of U1 is to develop a context-aware, real-time medical assistant with a human in the loop. We aim for enhanced medical care and medical training environments by operating an international initiative with leading experts in the field.

Surgical Training

To ensure high-quality patient care, it is crucial to train medical personnel effectively and efficiently. This holds especially for challenging surgical techniques such as laparoscopic or robot-assisted surgery, and for critical scenarios such as patient resuscitation. Laparoscopic surgery is a procedure to examine the abdomen in which a thin tube with a small camera is passed through a small incision in the abdominal wall. It offers many benefits to patients, but it is difficult to learn and perform because the instruments are cumbersome to use and depth perception is lost. For this reason, we investigate how to enhance conventional medical training by means of modern technology. In particular, we use a variety of sensor modalities to perceive the activities of the physician in training, and novel machine learning algorithms to analyze the collected sensor data. Our focus is the development of smart algorithms that provide automatic, constructive feedback to novice medical personnel, along with an automatic, objective assessment of their skill level.

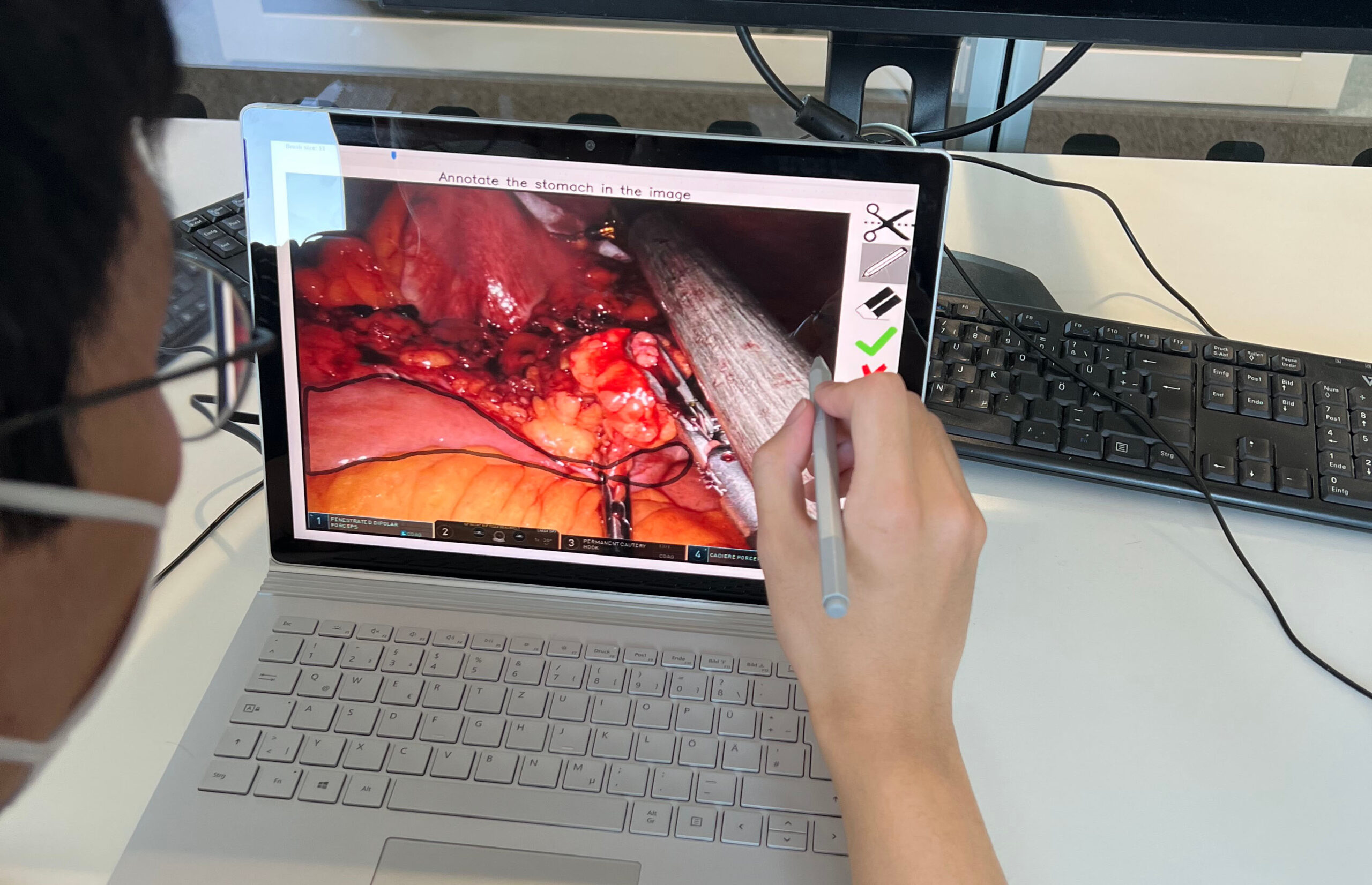

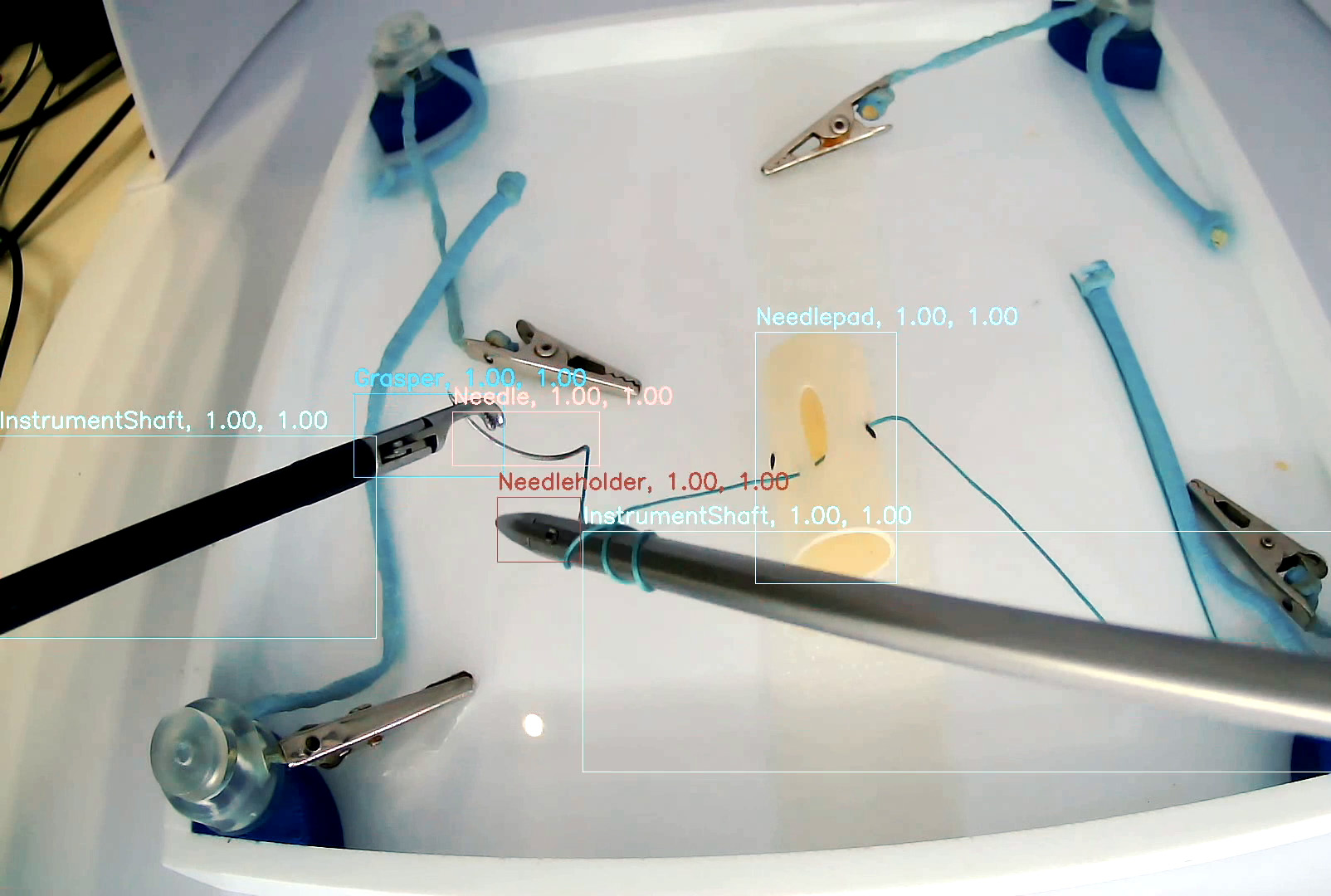

AI-assisted Surgical Training

Training for keyhole surgery (minimally invasive surgery such as laparoscopy) is complex, and learning success is not yet measured objectively. One prototype has already been built to optimize surgical training, and it has delivered promising results in small user studies. The prototype uses fast, machine-learning-based scene analysis to assess the trainee's learning progress and compare it with that of experts. For this purpose, a large dataset was collected and used to generate haptic feedback via the instrument, based on forces and instrument visibility.

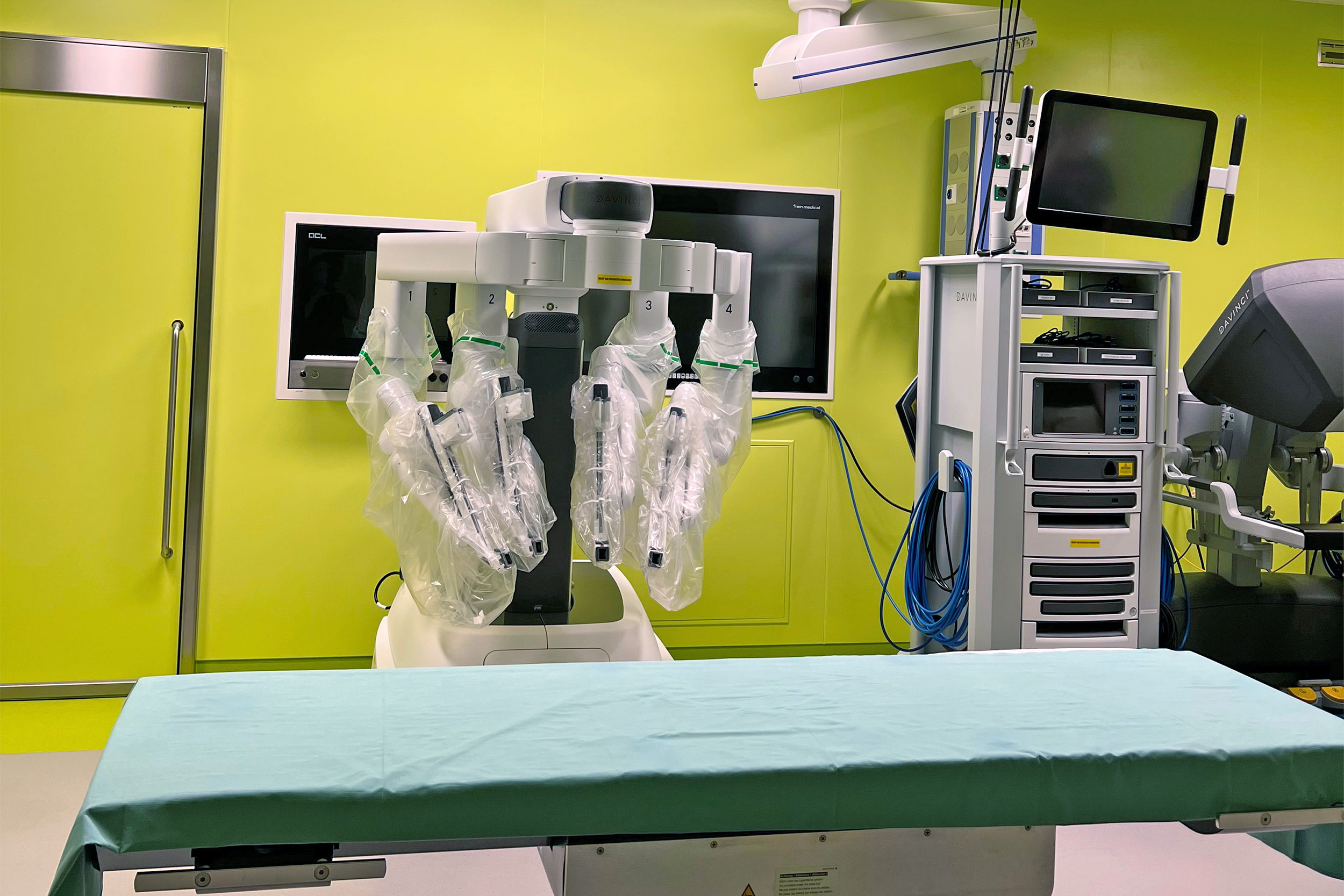

Robot-Assisted Surgery

This objective focuses on the real-time application of medical skills for computer and robotic assistance. The goal is to develop real-time methods that analyse sensor data in the operating room to provide context-aware assistance to the surgical team. Robot-assisted surgery offers many advantages for surgeons, such as stereo vision, improved instrument control and a better ergonomic setup. The research into robot-assisted surgery focuses on: a testbed for remote aid in laparoscopic surgery; future network technologies to control surgical robots over long-distance and intermittent connections; novel methods for autonomous camera navigation; and the integration of innovative devices and sensors (data gloves, VR headsets, force sensors) into the surgical workflow. This requires real-time control and low-latency communication networks, as well as online analysis and knowledge-based interpretation of sensor data with machine-learning methods.

EndoMersion

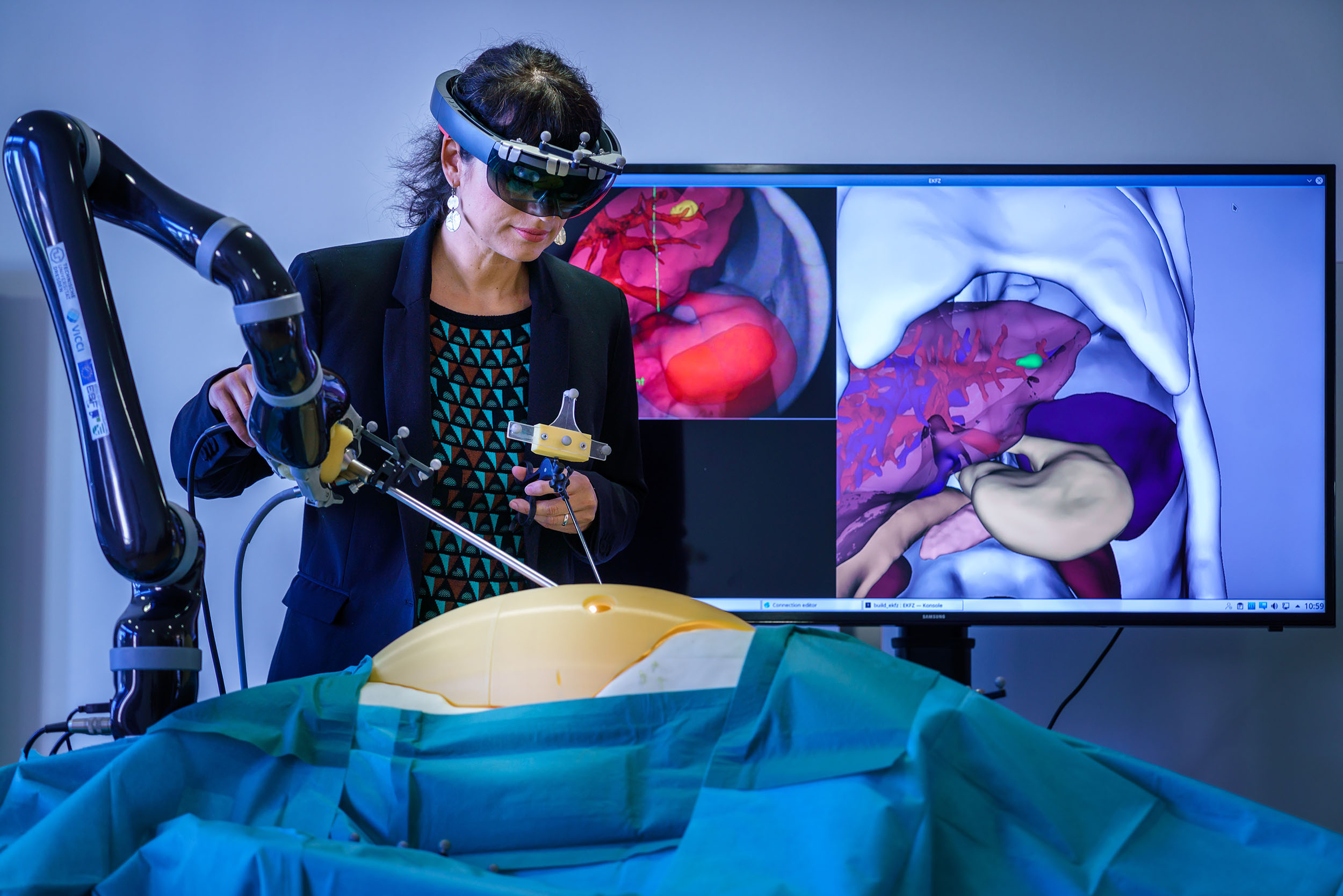

The EndoMersion demonstrator is an immersive robotic laparoscope guidance system with 3D perception. The goal is to provide computer-assisted camera guidance during keyhole surgery using patient and sensor data. The navigation is based on Augmented Reality and Artificial Intelligence. Together with the surgical department VTG, we acquire, generate and annotate large datasets of real surgical videos, which are processed by a neural network to create realistic simulated environments showing, for example, optimal cutting lines to protect nerves. The methods are being developed to bridge the gap between robotics, sensors, and data science in surgery.

CoBot

The CoBot assistance system is intended for tumor operations in the rectum. The device relieves the surgeon of directly holding and moving the instruments, and it translates larger hand movements, which the surgeon performs via two joystick-like handles, into tiny, tremor-free incisions. Since surgeons depend on visual information, the camera images of the laparoscope are shown with additional information, such as the location of important nerves, displayed at the right time. However, the surgeon remains in charge of every decision at all times. The system only provides support, similar to a navigation system in a car.
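The translation of large hand movements into small, steady instrument movements can be illustrated with two generic building blocks from teleoperation: motion scaling and low-pass filtering. The following is a minimal sketch under stated assumptions, not the CoBot's actual control method, which is not described here. The function name `scale_and_smooth`, the scaling factor `scale` and the smoothing factor `alpha` are hypothetical and chosen only for illustration.

```python
def scale_and_smooth(hand_positions, scale=0.2, alpha=0.1):
    """Map hand positions (mm) to instrument positions (mm).

    scale: hypothetical motion-scaling factor (0.2 means 5 mm of hand
           travel produce 1 mm of instrument travel).
    alpha: hypothetical smoothing factor of an exponential moving
           average; smaller values suppress fast tremor more strongly
           but add more lag.
    """
    smoothed = None
    instrument_positions = []
    for position in hand_positions:
        if smoothed is None:
            # Initialise the filter with the first sample.
            smoothed = tuple(position)
        else:
            # Exponential moving average acts as a simple low-pass filter.
            smoothed = tuple(
                alpha * new + (1.0 - alpha) * old
                for new, old in zip(position, smoothed)
            )
        # Scale the filtered hand position down to instrument space.
        instrument_positions.append(tuple(scale * s for s in smoothed))
    return instrument_positions


# A steady hand position at x = 10 mm with a +/-1 mm tremor on top.
hand = [(10.0 + (1.0 if i % 2 == 0 else -1.0), 0.0, 0.0) for i in range(200)]
instrument = scale_and_smooth(hand)
tail = [p[0] for p in instrument[-50:]]
print(f"instrument x stays within {min(tail):.3f} .. {max(tail):.3f} mm")
```

In this toy setting the tremor amplitude at the instrument tip is far smaller than at the hand, at the cost of some lag when the hand moves deliberately. A real system would have to balance that trade-off carefully and add safety limits.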

Collaborative Research Projects

The operating room of tomorrow requires a range of technologies, which are being tested in separate research projects that complement and build on each other.

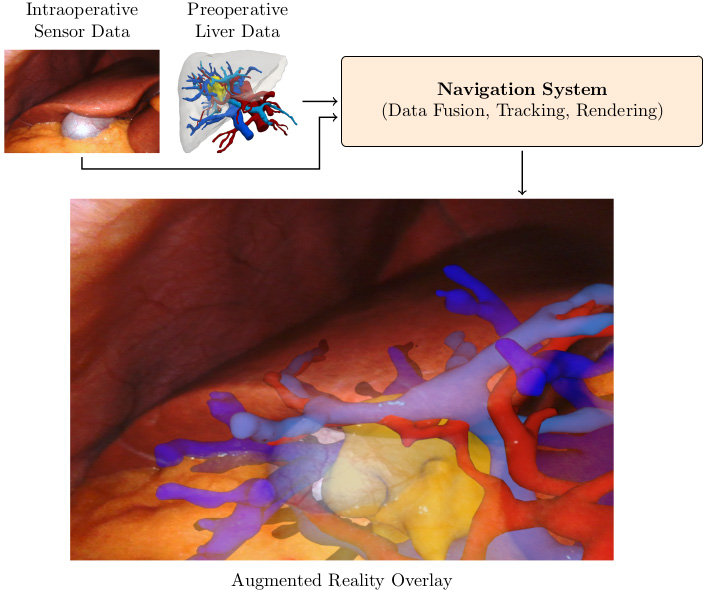

Navigation

The purpose of intraoperative navigation is to provide information about hidden risk and target structures based on preoperative patient planning data. The visualization can be done via Augmented Reality (AR), either by overlaying the information directly on the endoscopic view or by using AR glasses. In soft-tissue navigation, this process is substantially complicated by organ deformation, which is induced by forces applied to the organs, cutting of the tissue, different patient poses and the patient's breathing. Estimating organ-internal deformation and the movement of risk and target structures in real time from intraoperative sensor data (e.g. surgical video streams) and preoperative data is an open research topic. Our team is using novel machine learning methods for many of the tasks involved.
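The overlay step itself can be sketched with a plain pinhole camera model. The example below is an illustration under strong assumptions: the 3D points are already given in the camera coordinate frame, lens distortion is ignored, and the organ is treated as rigid, which is exactly the simplification that soft-tissue navigation cannot rely on. The function name `project_to_image`, the intrinsic parameters and the vessel coordinates are hypothetical.

```python
def project_to_image(points_camera, fx, fy, cx, cy):
    """Project 3D points (camera coordinates, mm) to pixel coordinates.

    Uses the pinhole model u = fx * x / z + cx, v = fy * y / z + cy.
    Points at or behind the camera plane (z <= 0) are skipped.
    """
    pixels = []
    for x, y, z in points_camera:
        if z <= 0:
            continue
        pixels.append((fx * x / z + cx, fy * y / z + cy))
    return pixels


# Hypothetical intrinsics for a 1920x1080 endoscopic image.
FX, FY, CX, CY = 1000.0, 1000.0, 960.0, 540.0

# Hypothetical points along a hidden vessel taken from preoperative planning,
# already transformed into the camera frame.
vessel = [(0.0, 0.0, 50.0), (5.0, -2.0, 50.0), (10.0, -4.0, 55.0)]

overlay = project_to_image(vessel, FX, FY, CX, CY)
for u, v in overlay:
    print(f"draw marker at pixel ({u:.1f}, {v:.1f})")
```

The hard part described above lies before this step: keeping the 3D positions of the structures correct while the organ deforms.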

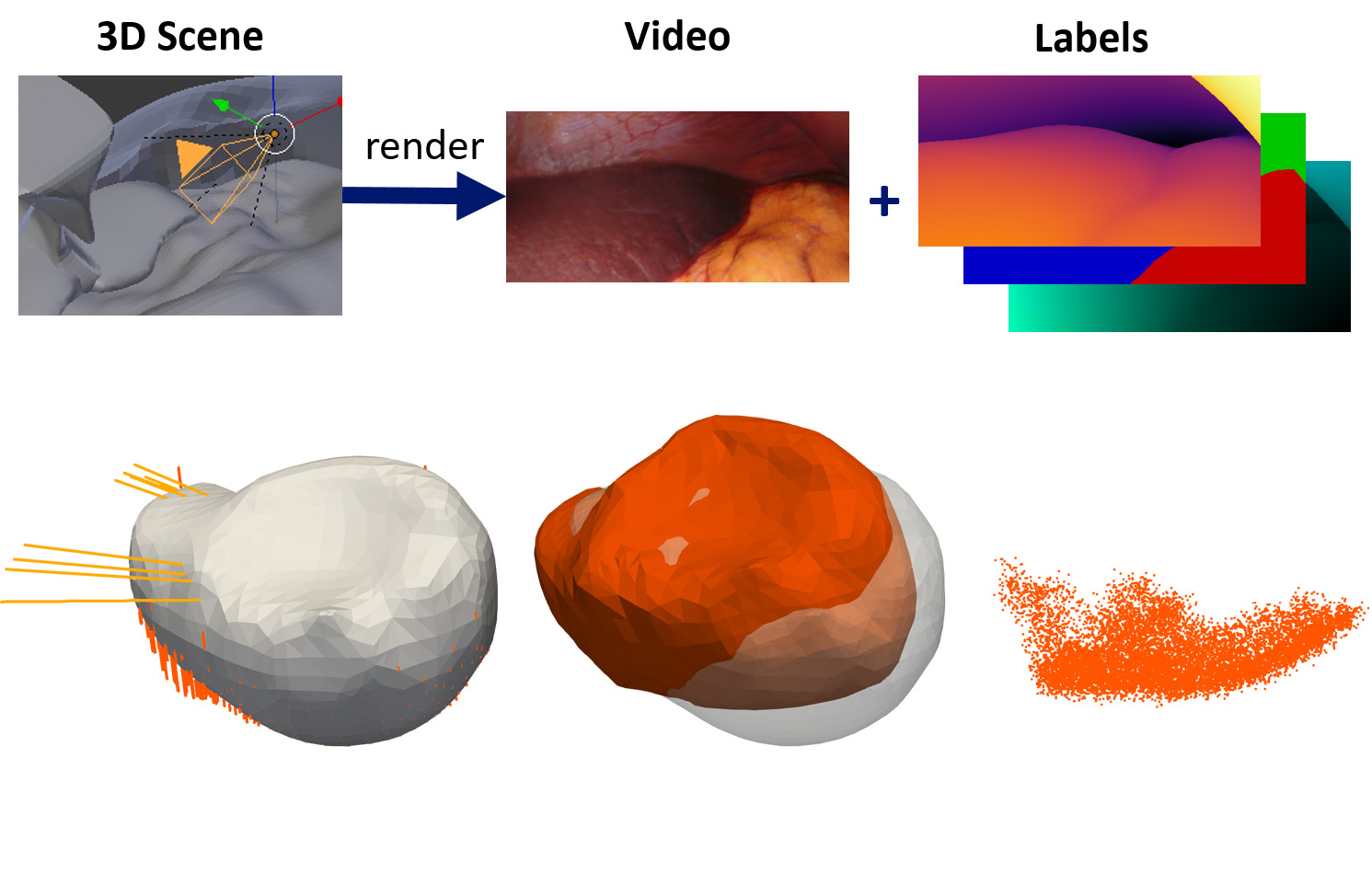

Simulation

In the surgical domain, obtaining ground-truth data for computer vision tasks is almost always a major bottleneck. This is especially the case for ground truth that would require additional sensors (e.g. depth, camera poses, 3D information) or fine-grained annotation (e.g. semantic segmentation). To address this, the aim is to render photorealistic image and video data from simple surgical simulations (e.g. laparoscopic 3D scenes).

There are almost no public datasets that show the liver of a single patient in multiple deformed states. To drive our machine learning algorithms for navigation, we are developing simulation pipelines to generate synthetic deformations of both real and non-real organs. We build upon physical biomechanical models to generate vast amounts of semi-realistic data, which are then used to train fast and powerful neural networks.
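The idea of generating many deformed states of one organ can be illustrated with a toy example. The sketch below is a deliberately simplified stand-in and not a biomechanical model: it pushes a random point of a point cloud in a random direction and lets the displacement fall off smoothly with distance (a Gaussian weight). All names and parameters (`random_deformation`, `make_dataset`, `max_push`, `sigma`) are hypothetical.

```python
import math
import random


def random_deformation(points, rng, max_push=5.0, sigma=20.0):
    """Return a smoothly deformed copy of a 3D point cloud (units: mm).

    A random point is pushed by up to max_push mm in a random direction;
    neighbouring points follow with a Gaussian falloff of width sigma.
    """
    center = rng.choice(points)
    direction = [rng.gauss(0.0, 1.0) for _ in range(3)]
    norm = math.sqrt(sum(d * d for d in direction)) or 1.0
    magnitude = rng.uniform(0.0, max_push)
    push = [magnitude * d / norm for d in direction]

    deformed = []
    for p in points:
        dist_sq = sum((a - b) ** 2 for a, b in zip(p, center))
        weight = math.exp(-dist_sq / (2.0 * sigma ** 2))
        deformed.append(tuple(a + weight * d for a, d in zip(p, push)))
    return deformed


def make_dataset(points, n_samples, seed=0):
    """Build (undeformed, deformed) pairs as toy training samples."""
    rng = random.Random(seed)
    return [(points, random_deformation(points, rng)) for _ in range(n_samples)]


# Toy "organ": a 5 x 5 x 5 grid of points with 10 mm spacing.
organ = [(10.0 * i, 10.0 * j, 10.0 * k)
         for i in range(5) for j in range(5) for k in range(5)]
dataset = make_dataset(organ, n_samples=100)
print(f"{len(dataset)} samples with {len(dataset[0][1])} points each")
```

A physically based pipeline would replace `random_deformation` with a simulation that respects tissue properties and boundary conditions. The overall pattern of sampling many random loads and storing the resulting pairs stays the same.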