The concept of virtual research rooms

To enable a rich and dynamic exchange of ideas among experts from the different research areas represented in CeTI, we use the concept of virtual research rooms (VRRs). We foresee a cross-level hierarchy with three kinds of rooms: disciplinary talent pool (TP) rooms, each grouping all principal investigators (PIs) from a single domain; interdisciplinary key concept (K) rooms, in which experts from different disciplines cooperate on shared innovative solutions; and use case (U) rooms.

The K rooms

The K rooms cover four key integrative technologies: haptic codecs (K1), intelligent networks for the tactile Internet (K2), augmented perception and interaction (K3), and human–machine co-adaptation (K4). Together they generate input for the use case (U) rooms.

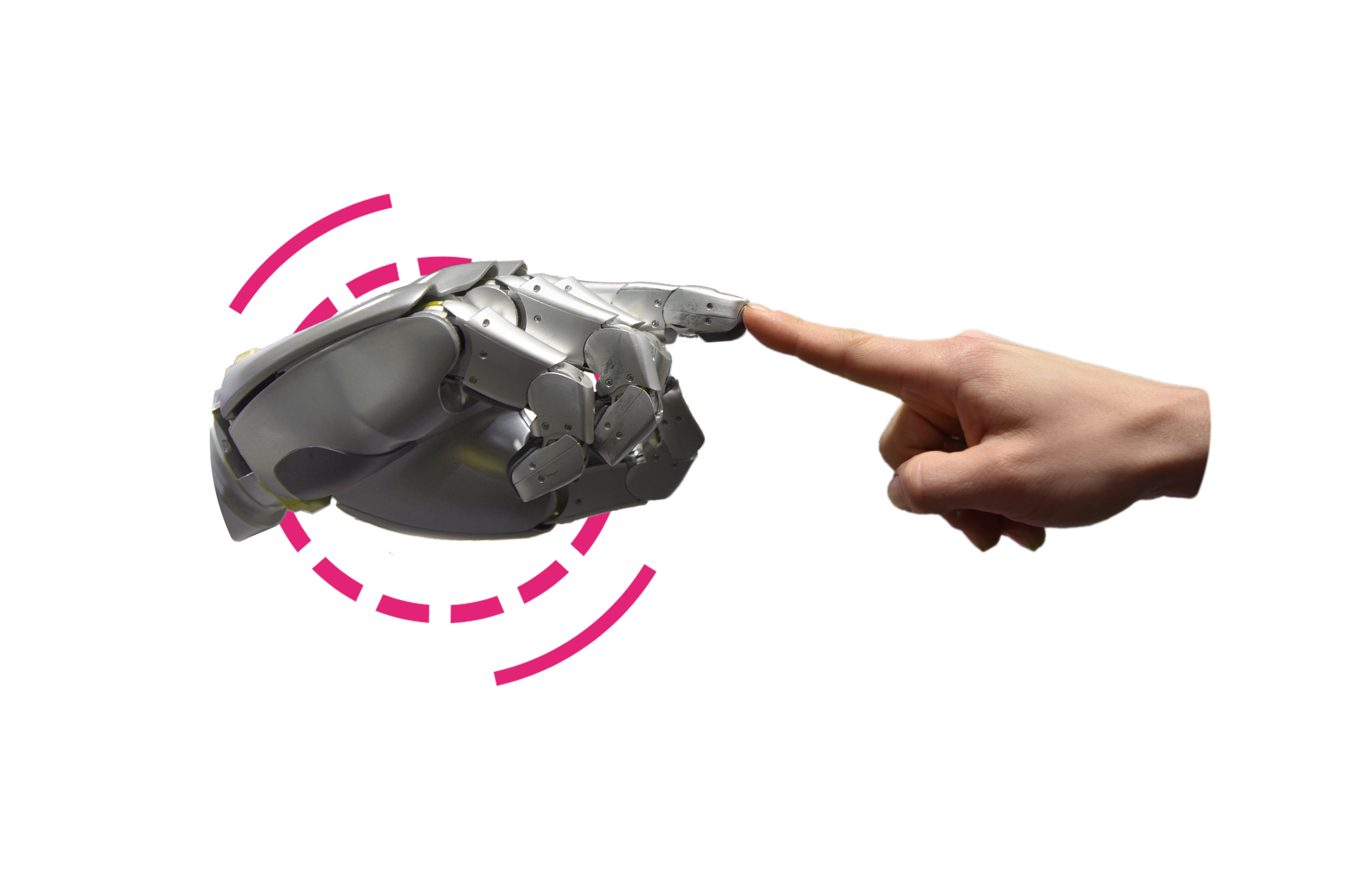

Haptic codecs

Support U rooms with efficient and low-latency visual-haptic communication solutions.

Perceptual, scalable, and learning-oriented haptic codecs that take user age and expertise into account.

Pioneers in perceptual haptic codec development, chairing IEEE P1918.1.1: Haptic Codecs for the Tactile Internet.

10x–100x data reduction for massive multipoint interactions.

Intelligent networks

Support U rooms across body, local, and wide area networks (BAN, LAN, and WAN).

Networks hosting computing capabilities for human and machine models.

Leadership in 5G.

Perceived real-time communication over a nation-wide SDN/NFV testbed.

Augmented perception and interaction

Develop new integrative multimodal interfaces.

Interfaces based on models of human goal-directed multisensory perception with feedback on fast time scales.

Unique combination of design, interactive media, electronics, and psychology.

Wearables for adaptive and user-friendly multimodal interfaces.

Co-adaptation

Establish human-style reasoning and develop computer-enhanced human understanding.

Hierarchical, probabilistic models for predictions at multiple time-scales; human-understandable, adaptive explanations for users.

Unique combination of neuroscience, machine learning, psychology, and human–computer interaction.

Human-inspired computational models and model-based multimodal feedback framework.